Introduction#

Overview#

What is Machine Learning?

One snarky answer and three increasingly useful ones!

Different classes of ML algorithms

What is Deep Learning?

ML for the GeoSciences

Ethical machine learning

*Roughly following Chapter 1 of UDL

What is machine learning?#

*XKCD 1838

What is machine learning?#

Machine learning can also be thought of as high-dimensional statistics. We are looking to distill key features and information directly from the data. “Machine Learning: A probabilistic perspective” by Kevin Murphy is a great source for this point of view.

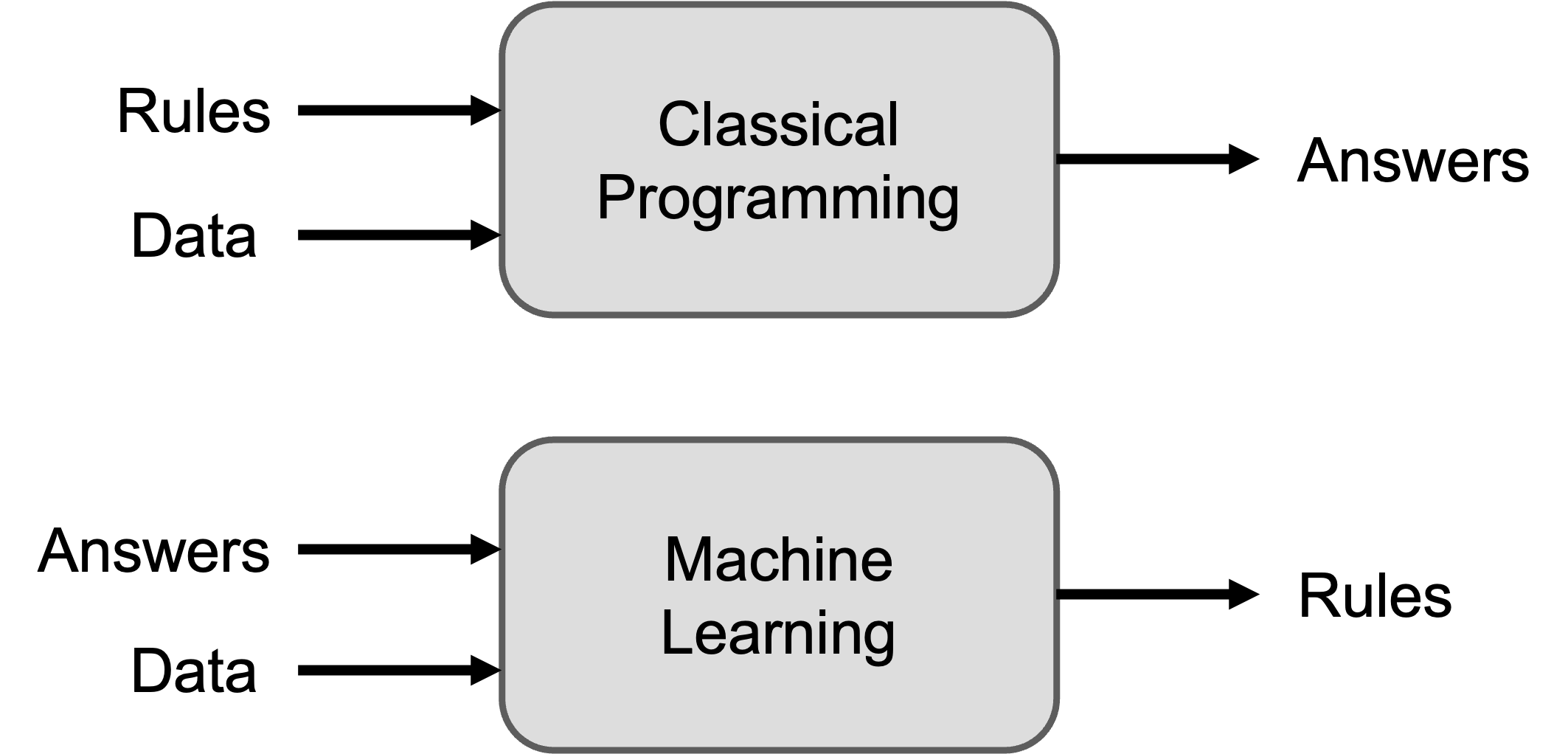

What is machine learning?#

This is true for science as well as programming. Rather than positing a functional form based on a scientific hypothesis, we provide ‘answers/labels’ along with our data in order to discern rules.

What is machine learning?#

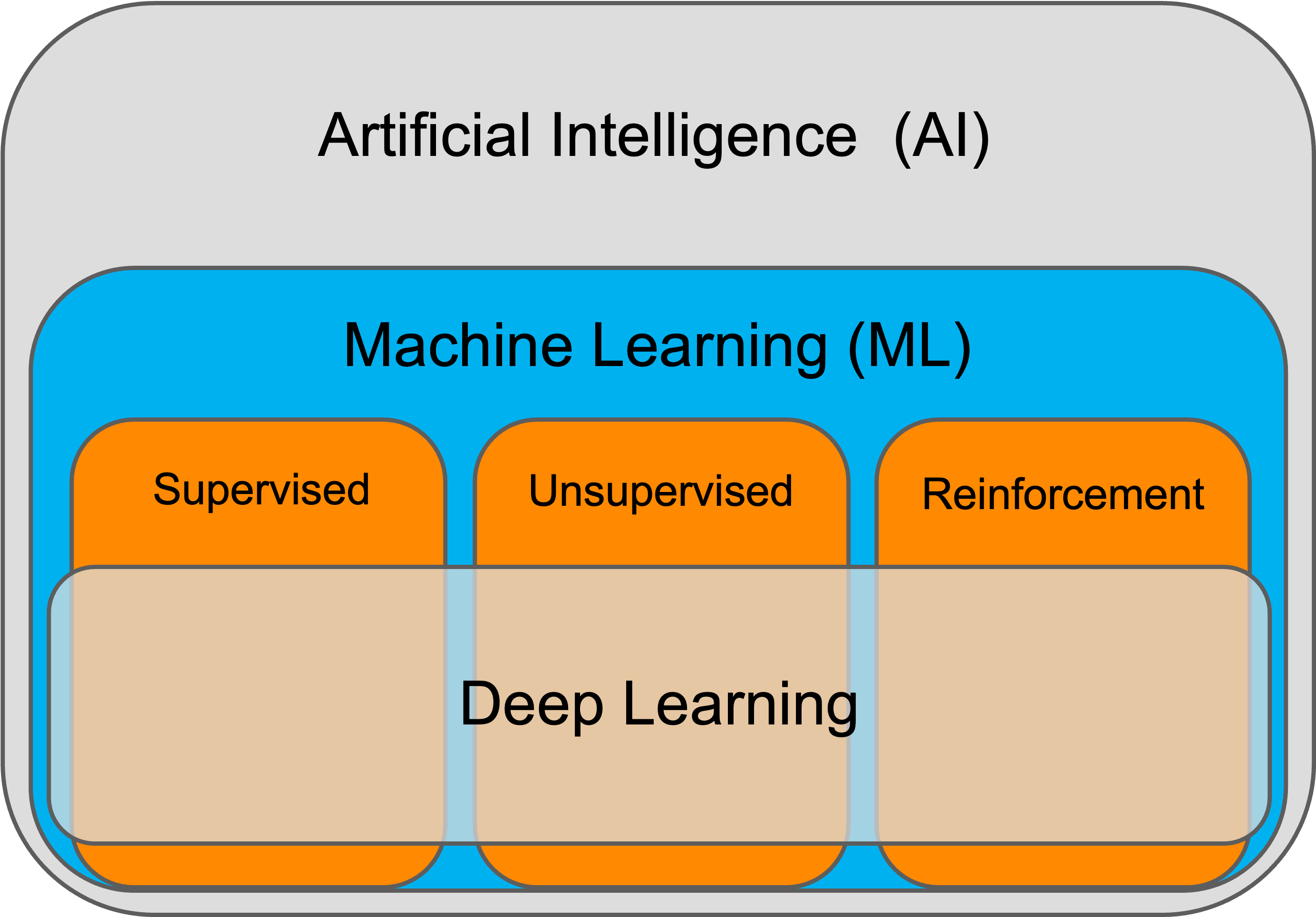

Machine learning is a subset of the broader field of Artificial Intelligence first imagined by Alan Turing. It encompasses a range of different tasks, and at present represents the majority of AI research.

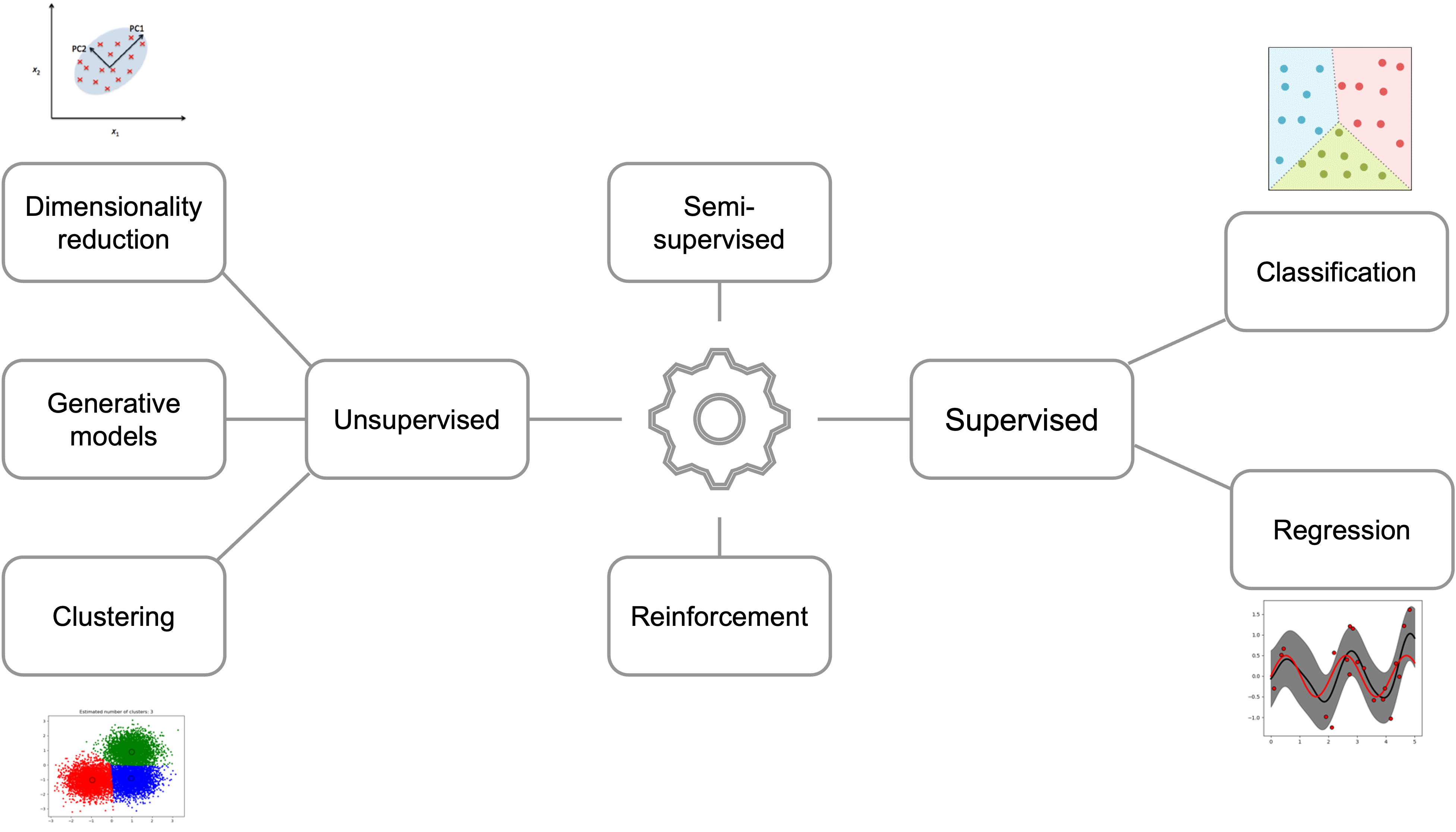

Different classes of ML#

In addition, supervised learning can include object detection and image segmentation. Unsupervised learning also includes self-supervised learning in which we use inductive biases to encourage certain desirable behaviours.

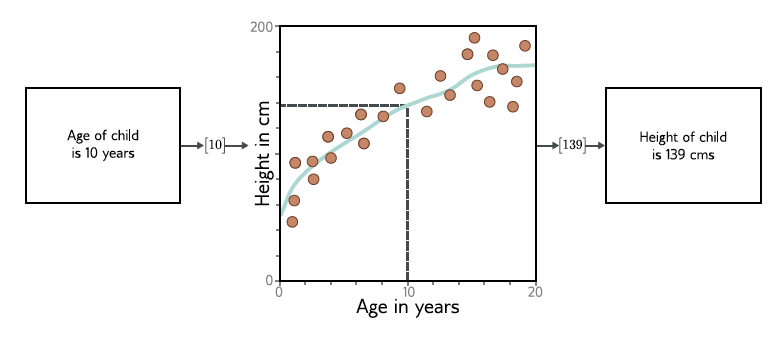

Supervised techniques#

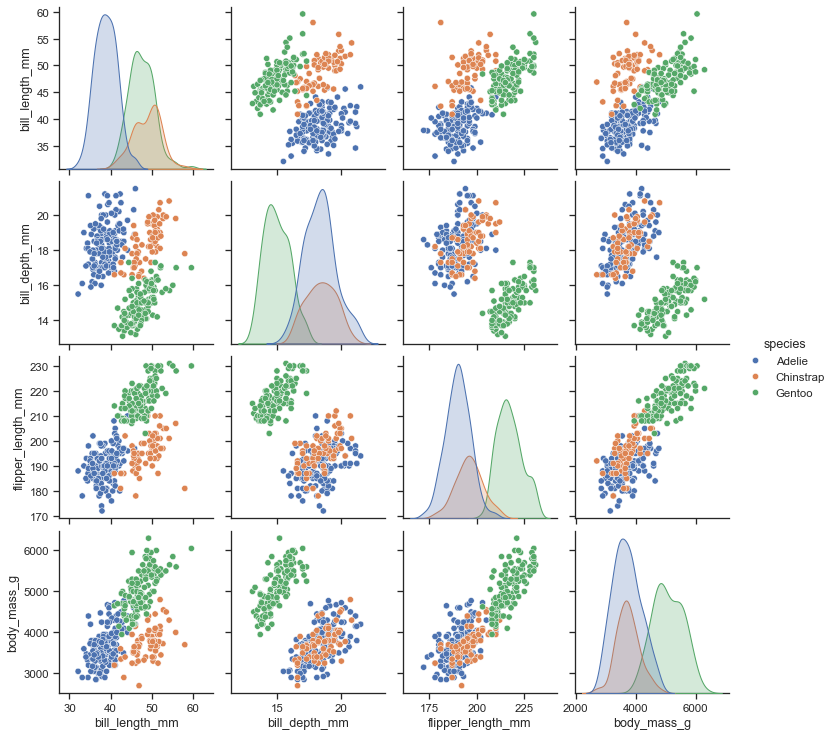

In its simplest form supervised learning involves learning a mapping from input data to output labels. This can be a regression problem, where we predict a continuous value, or a classification problem, where we predict a discrete value.
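As a minimal sketch of the two problem types (the toy data and the helper names `fit_line` and `fit_threshold` are illustrative assumptions, not part of the course material), the same inputs can be paired with either a continuous target or a discrete label:

```python
# Toy data: the same inputs with a continuous target and a binary label.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8, 11.0]   # roughly y = 2x + 1 (regression target)
labels = [0, 0, 0, 1, 1, 1]            # class flips between x = 2 and x = 3


def fit_line(xs, ys):
    """Ordinary least squares for y = w * x + b, using the closed-form solution."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b


def fit_threshold(xs, labels):
    """Pick the threshold t with the fewest errors for the rule 'predict 1 if x > t'."""
    sorted_xs = sorted(xs)
    candidates = [(a + b) / 2 for a, b in zip(sorted_xs, sorted_xs[1:])]

    def errors(t):
        return sum((x > t) != bool(lab) for x, lab in zip(xs, labels))

    return min(candidates, key=errors)


w, b = fit_line(xs, ys)
threshold = fit_threshold(xs, labels)
print(f"regression: y = {w:.2f} x + {b:.2f}")
print(f"classification: predict 1 if x > {threshold}")
```

In both cases the 'rule' is recovered from labelled examples rather than being written down in advance; only the type of output differs.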

Supervised techniques#

Regression and classification share a common toolset:

Linear models (including LASSO, ridge, SVM etc)

Decision tree and ensemble based

Gaussian process

Neural network (NN)

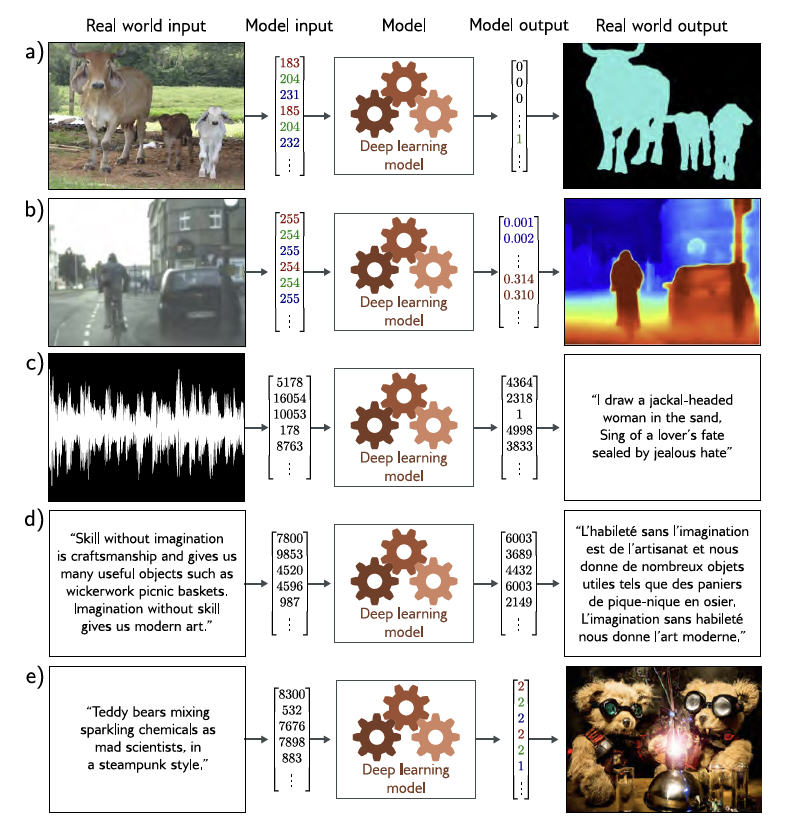

Image detection / segmentation can be done using ‘traditional’ image processing techniques such as edge-finding and watershedding, but is increasingly done with Deep NNs.

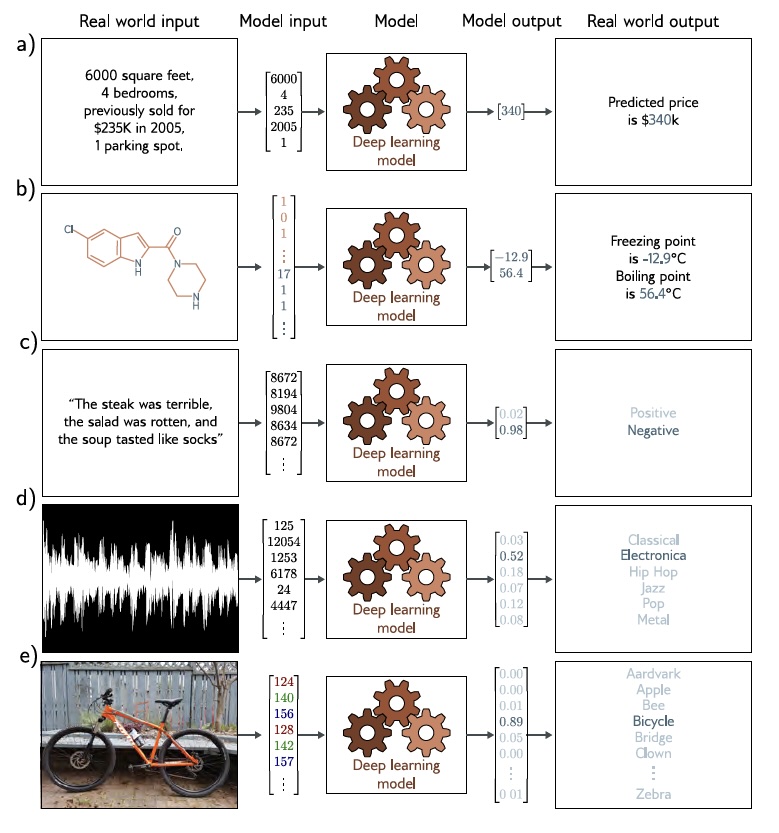

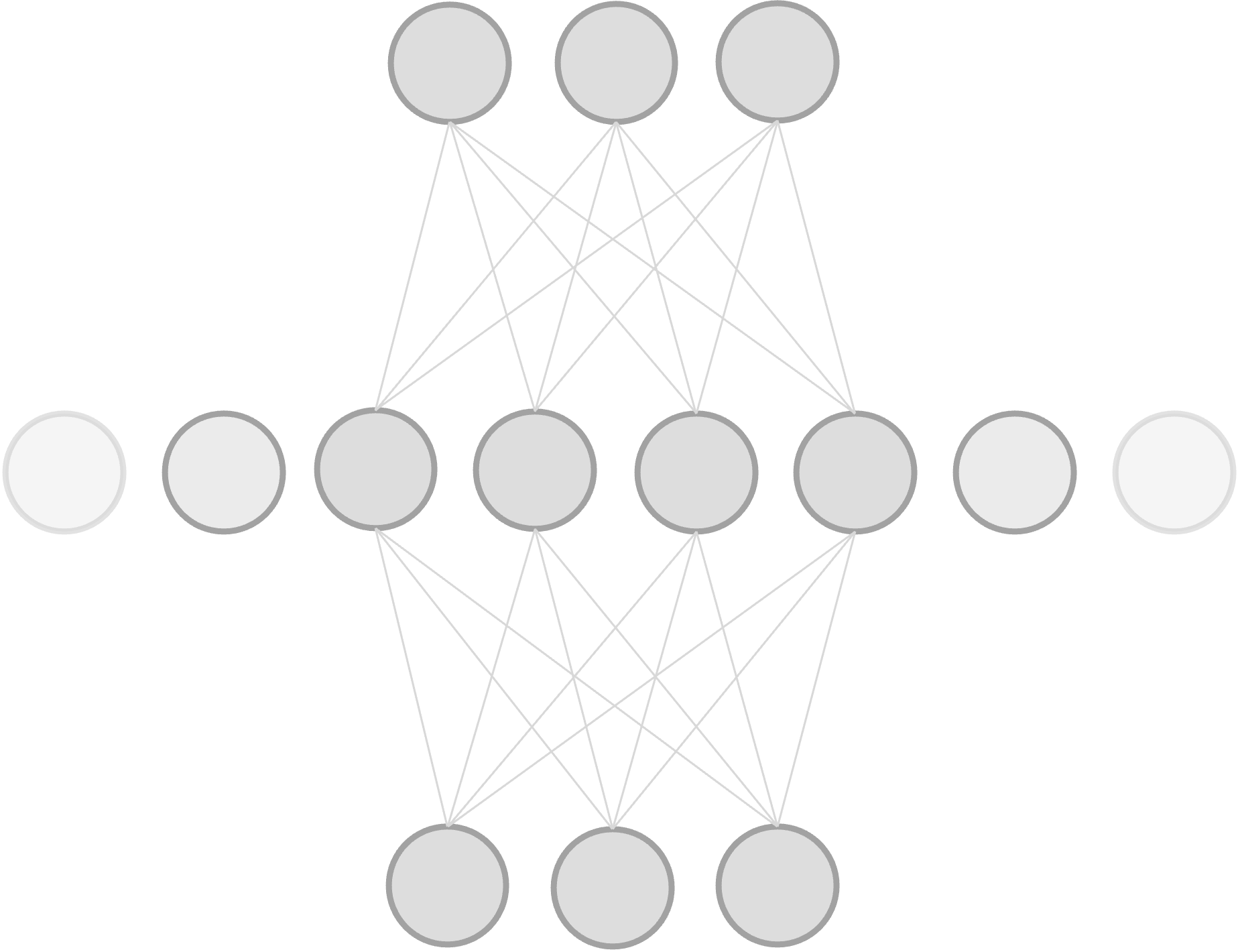

Supervised techniques - Deep Learning#

These deep learning approaches allow much more complex relationships to be learned in much higher dimensional data, but require a lot more data and computational resources.

Unsupervised techniques - Clustering#

Clustering is a classical problem with many possible approaches depending on the dataset size and dimensionality:

k-means

DBSCAN

Gaussian mixture models

Agglomerative clustering
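To make the first of these concrete, here is a minimal sketch of k-means (Lloyd's algorithm) in plain Python. The toy points and the choice of initial centroids are assumptions made for illustration; in practice a library implementation with a more careful initialisation would normally be used.

```python
def kmeans(points, centroids, n_iter=10):
    """Lloyd's algorithm in 2D: alternate assignment and centroid-update steps."""
    assignments = []
    for _ in range(n_iter):
        # Assignment step: index of the nearest centroid for every point.
        assignments = [
            min(
                range(len(centroids)),
                key=lambda k: (p[0] - centroids[k][0]) ** 2 + (p[1] - centroids[k][1]) ** 2,
            )
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == k]
            if members:
                new_centroids.append(
                    (
                        sum(p[0] for p in members) / len(members),
                        sum(p[1] for p in members) / len(members),
                    )
                )
            else:
                new_centroids.append(centroids[k])  # leave an empty cluster where it is
        centroids = new_centroids
    return centroids, assignments


# Two well-separated blobs (illustrative data).
points = [(0, 0), (0, 1), (1, 0), (1, 1), (8, 8), (8, 9), (9, 8), (9, 9)]
# Assumed initialisation: the first and last points.
centroids, assignments = kmeans(points, [points[0], points[-1]])
print(centroids, assignments)
```

No labels are supplied at any point: the grouping emerges only from distances between the points.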

Unsupervised techniques - dimensionality reduction#

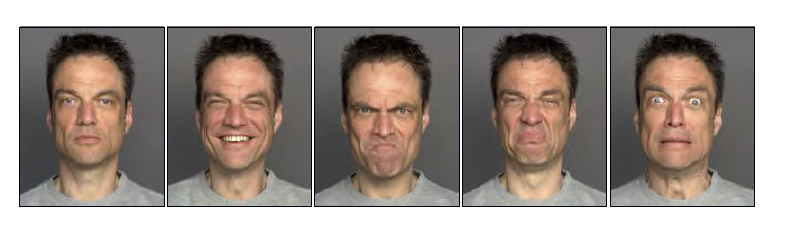

Many real-life systems exhibit high-dimensional behavior, but the underlying dynamics are often much lower-dimensional. The human face is one example: it’s possible to describe most of the variations in any face with just a few dozen numbers.

Unsupervised techniques - dimensionality reduction#

Many dimensionality reduction techniques exist:

Principal component analysis (PCA) / empirical orthogonal functions (EOF)

Kernel PCA

Density estimation

Gaussian mixture models
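As a rough sketch of the idea behind PCA, the snippet below finds the leading principal component of a small 2D dataset using power iteration on the covariance matrix. The data and the function name `pca_first_component` are illustrative assumptions; real applications would typically use an SVD-based library routine instead.

```python
import math


def pca_first_component(data, n_iter=100):
    """Leading principal component of 2D data via power iteration."""
    n = len(data)
    mean = [sum(row[j] for row in data) / n for j in range(2)]
    centred = [[row[j] - mean[j] for j in range(2)] for row in data]
    # Sample covariance matrix (2 x 2).
    cov = [
        [sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(2)]
        for i in range(2)
    ]
    # Power iteration: repeatedly apply the covariance matrix and renormalise.
    v = [1.0, 0.0]
    for _ in range(n_iter):
        w = [cov[0][0] * v[0] + cov[0][1] * v[1], cov[1][0] * v[0] + cov[1][1] * v[1]]
        norm = math.hypot(w[0], w[1])
        v = [w[0] / norm, w[1] / norm]
    # Variance along v, as a fraction of the total variance.
    cv = [cov[0][0] * v[0] + cov[0][1] * v[1], cov[1][0] * v[0] + cov[1][1] * v[1]]
    explained = (v[0] * cv[0] + v[1] * cv[1]) / (cov[0][0] + cov[1][1])
    # One number per sample: the coordinate along the leading component.
    scores = [r[0] * v[0] + r[1] * v[1] for r in centred]
    return v, explained, scores


# Points lying close to the line y = 2x (illustrative data).
data = [(-2.0, -4.1), (-1.0, -1.9), (0.0, 0.1), (1.0, 2.0), (2.0, 3.9)]
direction, explained, scores = pca_first_component(data)
print(direction, explained)
```

Although each sample has two coordinates, a single score per sample captures almost all of the variance, which is the sense in which the data are intrinsically one-dimensional.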

Unsupervised techniques - dimensionality reduction#

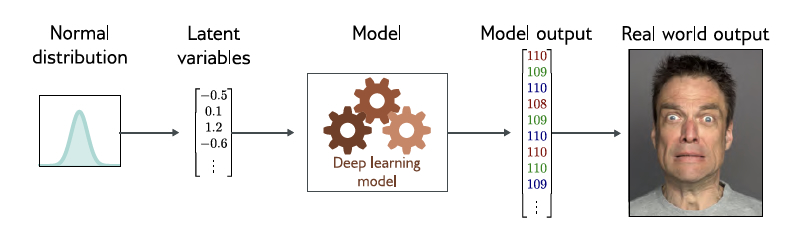

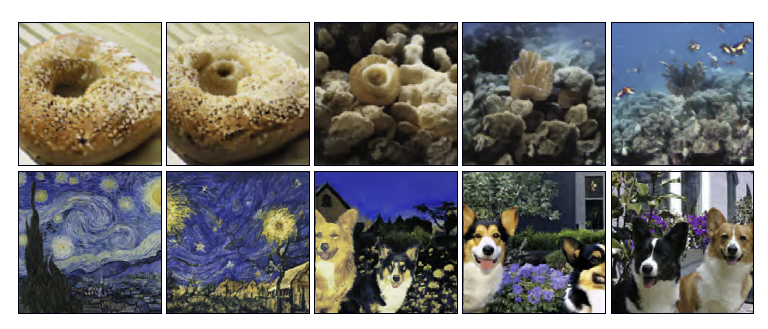

(Deep) NNs provide many other ways of learning underlying symmetries in data, including self-supervised and semi-supervised approaches. If such symmetries are learnt probabilistically then we call sampling from those models ‘generative’.

Generative models#

It turns out that many real-world datasets exhibit this lower intrinsic dimensionality, and that the underlying symmetries can be effectively learnt by a Deep NN. Jointly learning these symmetries with associated textual context (using e.g. CLIP) is the secret behind text-to-image generation.

Generative models#

When constructed in a certain way, it is possible to interpolate between different samples in the latent space of the model (more on this later). This allows for the generation of new samples that are not in the training set - and some funky images!

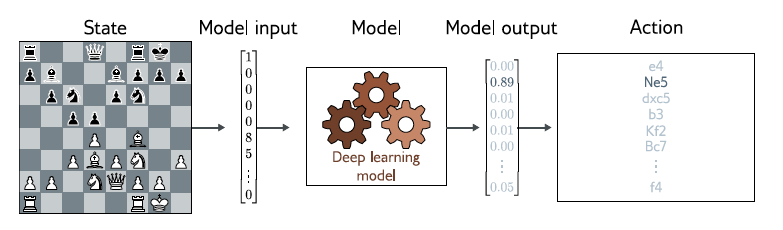
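The interpolation step itself is simple, and can be sketched as below. The latent vectors and `toy_decoder` are hypothetical stand-ins: in a real generative model the decoder would be a trained network and the latent vectors would come from encoding or sampling.

```python
def interpolate(z_a, z_b, n_steps):
    """Linearly interpolate between two latent vectors, endpoints included."""
    return [
        [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]
        for t in (i / (n_steps - 1) for i in range(n_steps))
    ]


def toy_decoder(z):
    """Hypothetical stand-in for a trained decoder: maps a 2D latent to a 2-value 'sample'."""
    return [z[0] + z[1], z[0] - z[1]]


z_a = [0.0, 2.0]    # latent code of one sample (assumed)
z_b = [4.0, -2.0]   # latent code of another sample (assumed)

path = interpolate(z_a, z_b, n_steps=5)
samples = [toy_decoder(z) for z in path]
for z, s in zip(path, samples):
    print(z, "->", s)
```

Every intermediate latent vector is decoded into a new sample that need not appear in the training set.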

Reinforcement learning#

Reinforcement learning is somewhat different - it aims to teach an agent how to behave in a dynamic environment to maximise some abstract, or delayed, reward.

From Wikipedia:

It differs from supervised learning in not needing labelled input/output pairs to be presented, and in not needing sub-optimal actions to be explicitly corrected. Instead the focus is on finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge) with the goal of maximizing the long term reward, whose feedback might be incomplete or delayed.
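The exploration/exploitation balance can be illustrated with an epsilon-greedy agent on a multi-armed bandit, which is about the simplest reinforcement learning setting (there is no state, only a choice of action). This is a sketch under assumed reward means, noise level and parameter values, not a recipe for real problems.

```python
import random


def run_bandit(true_means, epsilon=0.1, n_steps=2000, seed=0):
    """Epsilon-greedy agent: explore with probability epsilon, otherwise exploit."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    counts = [0] * n_arms      # how often each arm has been pulled
    values = [0.0] * n_arms    # running estimate of each arm's mean reward
    total_reward = 0.0
    for _ in range(n_steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                        # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit
        # The agent only ever sees a noisy reward, never the true means.
        reward = rng.gauss(true_means[arm], 0.1)
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]    # incremental mean
        total_reward += reward
    return values, counts, total_reward


values, counts, total_reward = run_bandit([0.2, 0.5, 0.8])
best_arm = max(range(len(values)), key=lambda a: values[a])
print("estimated values:", [round(v, 2) for v in values])
print("pull counts:", counts, "best arm:", best_arm)
```

Note that no labelled 'correct action' is ever provided; the agent learns which arm is best purely from the rewards it receives.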

Reinforcement learning#

These approaches are what underpin the success in playing Chess, Go and other games and are seen as promising approaches for real world agents and robots, but training can be very fiddly.

What is deep learning?#

The phrase ‘Deep learning’ relates to the use of deep neural networks (10-100 layers) for a given machine learning task. It has become synonymous with many aspects of machine learning because of these models’ flexibility and performance across a wide range of tasks.

In this course we will mostly focus on these models because of their flexibility, and some of the unique challenges in training and using them in scientific applications.

Why use deep networks?#

The universal approximation theorem tells us that any continuous 1D function can be approximated to arbitrary precision by a sufficiently wide shallow Neural Network. So why go deeper?

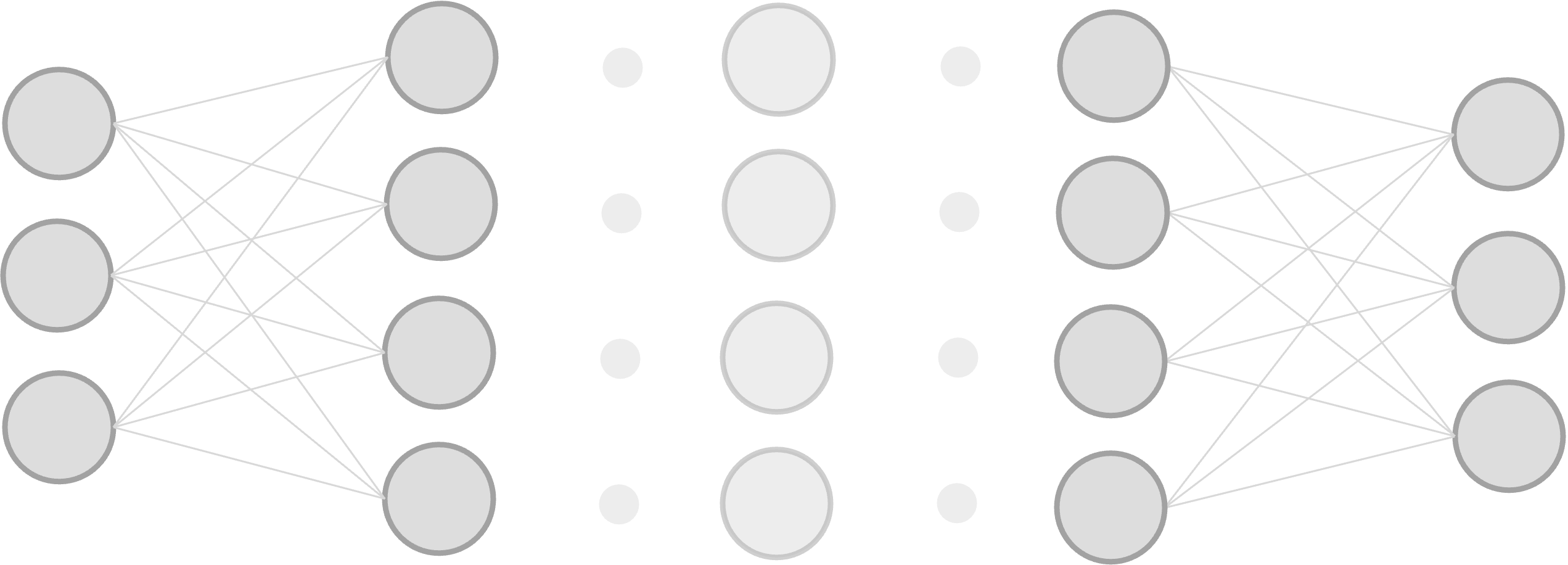
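To see why width alone is enough in 1D, the sketch below hand-constructs (rather than trains) a one-hidden-layer ReLU network that linearly interpolates a target function, and shows the error shrinking as hidden units are added. The target function and the helper names are illustrative assumptions.

```python
import math


def relu(x):
    return max(0.0, x)


def build_shallow_net(f, n_hidden, lo=0.0, hi=1.0):
    """One-hidden-layer ReLU network that interpolates f at n_hidden + 1 equally spaced knots."""
    knots = [lo + (hi - lo) * i / n_hidden for i in range(n_hidden + 1)]
    values = [f(k) for k in knots]
    slopes = [
        (values[i + 1] - values[i]) / (knots[i + 1] - knots[i]) for i in range(n_hidden)
    ]
    # Each hidden unit switches on at one knot; its output weight is the change in slope there.
    out_weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, n_hidden)]
    hidden_biases = knots[:n_hidden]

    def net(x):
        return values[0] + sum(w * relu(x - b) for w, b in zip(out_weights, hidden_biases))

    return net


def max_error(f, net, n_test=1000):
    """Largest absolute error on an evenly spaced test grid over [0, 1]."""
    return max(abs(f(i / n_test) - net(i / n_test)) for i in range(n_test + 1))


def target(x):
    return math.sin(2 * math.pi * x)


errors = {width: max_error(target, build_shallow_net(target, width)) for width in (4, 16, 64)}
for width, err in errors.items():
    print(f"{width:3d} hidden units: max error {err:.4f}")
```

Each hidden unit contributes one 'joint' to a piecewise linear function, so adding units lets the network follow the target more closely.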

Why use deep networks?#

While shallow NNs can be very effective regressors, it turns out that they can be impractical for some functions - requiring enormous numbers of hidden units.

In fact, some functions can be modelled much more efficiently by increasing the number of layers, rather than the width of the layers - particularly those with large numbers of inputs.

Note, we won’t actually draw out deep networks like this because drawing all the links becomes very tedious! Rather we schematically draw out the structure with the main focus being representing the shape of the matrices in each layer.
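A classic toy illustration of this depth efficiency is to compose a two-unit ReLU 'tent' layer with itself. This is a sketch with hand-chosen weights, not a trained model, and the function names are assumptions. Each extra layer doubles the number of linear pieces, whereas a one-hidden-layer ReLU network with a 1D input gains at most one extra piece per hidden unit.

```python
def relu(x):
    return max(0.0, x)


def tent_layer(x):
    """One hidden layer with two ReLU units: maps [0, 1] onto [0, 1] as a 'tent'."""
    return 2.0 * relu(x) - 4.0 * relu(x - 0.5)


def deep_net(x, depth):
    """Compose the same two-unit layer `depth` times."""
    for _ in range(depth):
        x = tent_layer(x)
    return x


def count_linear_pieces(f, n_grid=4096):
    """Count linear pieces of f on [0, 1] by counting slope changes on a fine grid."""
    ys = [f(i / n_grid) for i in range(n_grid + 1)]
    slopes = [b - a for a, b in zip(ys, ys[1:])]
    changes = sum(1 for s0, s1 in zip(slopes, slopes[1:]) if s0 != s1)
    return changes + 1


for depth in (1, 2, 3, 4, 5):
    pieces = count_linear_pieces(lambda x: deep_net(x, depth))
    print(f"depth {depth}: {2 * depth} hidden units, {pieces} linear pieces")
```

With five layers the network uses 10 hidden units in total, while a shallow ReLU network would need at least 31 hidden units to produce the same number of pieces.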

Why use deep networks?#

Historically it was challenging to train deep networks because of the relatively large number of parameters required to get good results. GPUs made this much less of a problem.

They became especially prevalent in modelling imagery because of the high dimensional input space, and the advent of convolutional layers which encode powerful inductive biases (even without training). We’ll discuss these more later in the course.

Practically it has been found that deep networks both train more easily, and generalize better than shallow ones. This is likely due to over-parameterization, but is not well understood and we’ll also return to the topic at the end of the course.
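To illustrate the inductive bias of convolutional layers mentioned above, the sketch below applies a fixed, untrained kernel to a 1D signal. The kernel and signals are illustrative assumptions. Because the same weights are applied at every position, shifting the input simply shifts the output (translation equivariance).

```python
def conv1d(signal, kernel):
    """'Valid' 1D cross-correlation, the operation used in convolutional layers."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


edge_kernel = [-1.0, 1.0]  # fixed, untrained weights: responds to steps up (+1) and down (-1)

signal = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]
shifted = [0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]  # same pattern, two samples later

out = conv1d(signal, edge_kernel)
out_shifted = conv1d(shifted, edge_kernel)
print(out)
print(out_shifted)
```

The edges are detected wherever they occur, without the network having to learn a separate detector for each position.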

Why use deep networks?#

More prosaically, they work! Deep NNs are the core of almost all of the most recent successes in AI and machine learning.

While the theoretical understanding is not as mature for these structures, they have repeatedly demonstrated their utility in a wide variety of tasks.

Hence, constructing and working with these models is often more akin to engineering than science, which is why this course will focus on hands-on experience over theoretical underpinning.

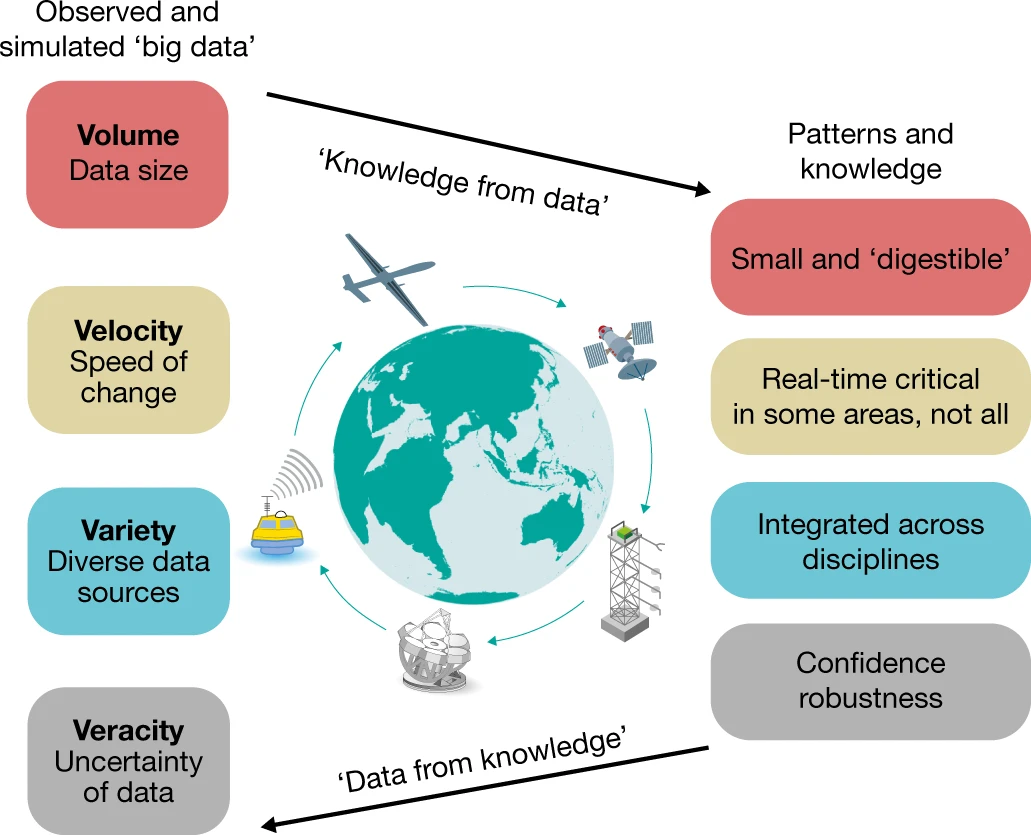

Machine Learning in Environmental and Geo-sciences#

The environmental and geosciences are well poised to leverage these advances.

Climate - Ocean - Atmosphere Program (COAP)#

This is the section I know best, and it also seems to be at the forefront currently, at least in applying big deep learning models.

Examples include:

Data-driven weather models that beat the state-of-the-art physical models

New ML parameterizations that allow representation of more detailed physical processes

Large and broad efforts to better leverage large volumes of remote sensing data

Promising new approaches for assimilating these observations into hybrid-physical models

Some relevant literature:

https://royalsocietypublishing.org/toc/rsta/2021/379/2194

https://iopscience.iop.org/article/10.1088/1748-9326/ab4e55

https://www.nature.com/articles/s41586-019-0912-1#Sec17

+One of the other big review papers and some specific examples

Climate - Ocean - Atmosphere Program (COAP)#

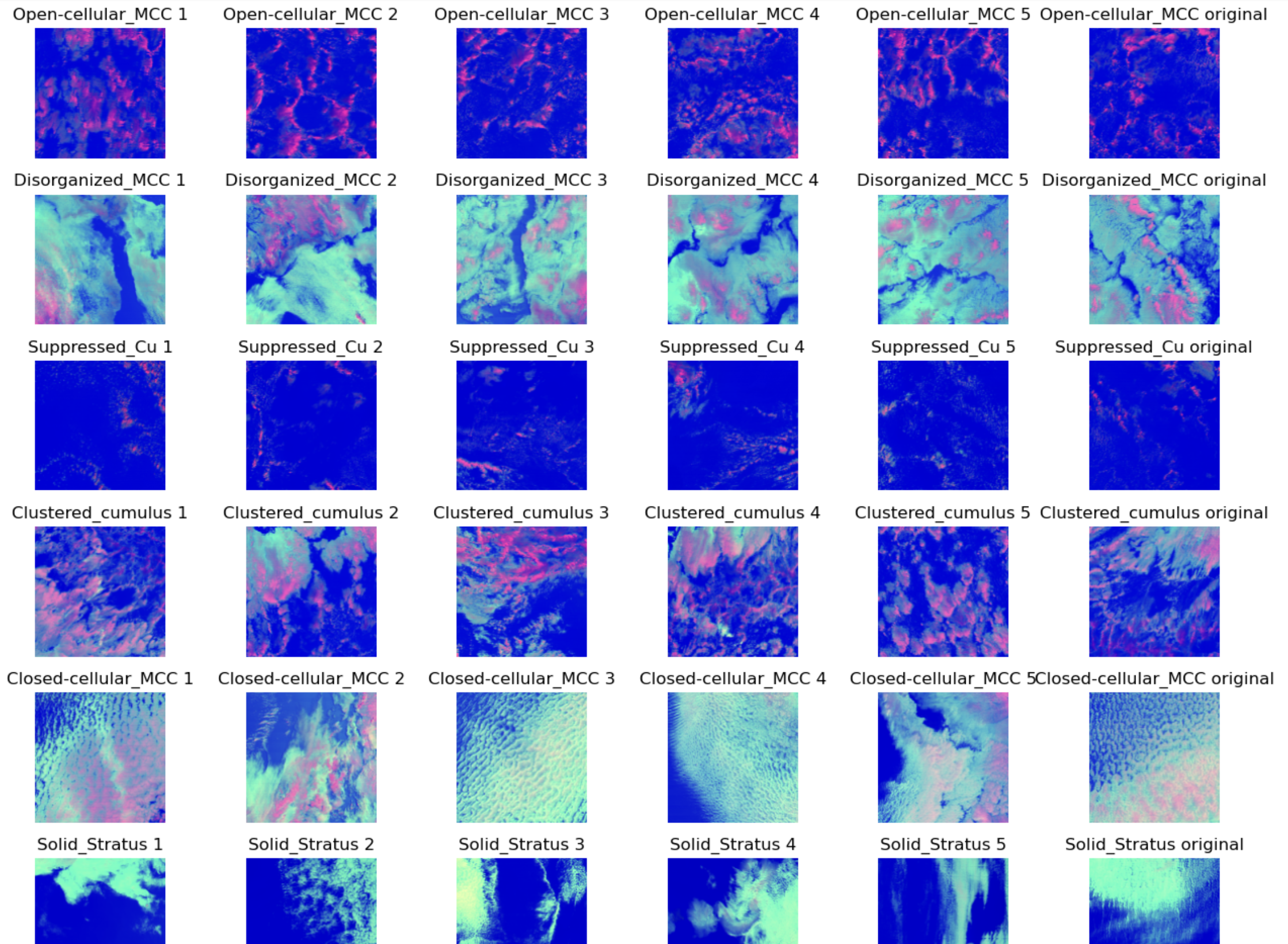

Later in the course we will reproduce and explore ClimateBench - a climate model emulation benchmark.

We will also look at techniques for unsupervised learning of satellite imagery.

Geosciences of the Earth, Oceans and Planets (GEO)#

Probably the most statistically literate field, Geo has been leveraging ML approaches for some time (and invented Gaussian process regression!) and there have been plenty of applications of these approaches to date:

High resolution subsurface structure using active seismic sources in exploration geophysics

Pioneered the development of Physics Informed Neural Networks (PINNs)

Microearthquake detection, and earthquake early warning?

Large spatial scale remote sensing imagery classification

What did I miss?!

https://geo-smart.github.io/usecases

https://www.science.org/doi/10.1126/science.abm4470

https://www.science.org/doi/10.1126/sciadv.1700578

https://arxiv.org/abs/2006.11894

https://agupubs.onlinelibrary.wiley.com/doi/full/10.1029/2021RG000742

Ocean Biosciences Program (OBP)#

Again, lots of potential applications in this data-rich field:

Detection and classification of ocean life at all scales in remote and sub-surface imagery

Improved modelling and network analysis

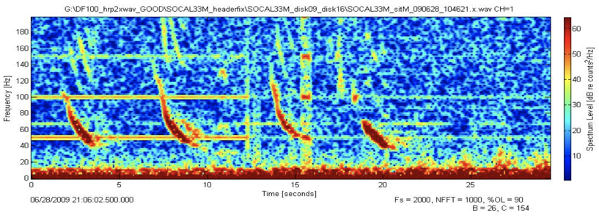

New opportunities to harness large volumes of acoustic data

We will explore this last example in detail later in the course - applying CNNs to the detection of whale song just off the coast of California.

And to the detection of Plankton in under-water imagery:

https://spo.nmfs.noaa.gov/sites/default/files/TMSPO199_0.pdf

https://ieeexplore.ieee.org/abstract/document/7404607

Michaela’s paper?

Ask for examples from the students

Ethics#

Machine learning is already a powerful tool, and could potentially become as important as the steam engine and electricity, so it is important to consider the ethical implications of its use.

Bias and Fairness

Explainability

Privacy and Transparency

Concentrating Power

Existential Risk

Bias and Fairness#

Bias refers to the systematic errors or prejudices that can be present in the data used to train machine learning models. It can lead to biased predictions and unfair outcomes, perpetuating and potentially exacerbating existing inequalities and biases.

Fairness is the concept of ensuring that machine learning models do not discriminate against individuals or groups based on protected attributes such as race, gender, or age.

Fairness in machine learning requires careful consideration of the data used for training, the features selected, and the algorithms employed.

Techniques such as data preprocessing, feature engineering, and algorithmic adjustments can be used to mitigate bias and promote fairness.

Privacy and Transparency#

Transparency and accountability are key in promoting fairness and addressing bias in machine learning systems.

Transparency is especially crucial when models are deployed in public functions. The data that was used to train the model, and the way the model is used to make decisions, should be clear and understandable to the people affected by it.

Another important ethical consideration in machine learning is privacy. Machine learning models can be trained on sensitive data, such as personal information or medical records, which raises concerns about data privacy and security.

Ongoing research and collaboration are essential to develop best practices and guidelines for addressing bias and fairness in machine learning.

Explainability#

Explainability is a critical aspect of machine learning models, especially in domains where decisions have significant consequences. It refers to the ability to understand and interpret the reasoning behind a model’s predictions or decisions.

Explainable models build trust and confidence in the predictions made by the model. Users are more likely to accept and adopt a model if they can understand how it arrives at its conclusions.

Complexity: As models become more complex, their explainability decreases. Deep learning models, for example, are often considered black boxes due to their intricate architectures. There is often a trade-off between model performance and explainability.

Research is ongoing to develop more robust and reliable techniques for explainability in machine learning.

Concentrating Power#

Large technology companies have invested a huge amount of money in developing the latest machine learning models, but it’s not altruistic. They are looking to leverage these models to increase their profits and market share.

Even the latest efforts for ML climate and weather models are primarily driven by business interests, and it’s important to consider the risk of concentrating power in the hands of a few companies.

The same can be said about large physical climate models, but at least there is a large community of researchers and a lot of transparency in the process.

Ideally ML emulators can democratize access to these models, but it’s important to consider the potential for misuse and abuse of these technologies.